04. AI Life Cycle Focus

4.1 AI Controls within the AI Life Cycle

There is no single path or procedure for deploying AI use cases. The actions to consider vary too widely with organizational makeup (size and complexity), use case, regulatory regime, and risk tolerance. However, a number of high-level operating components appear to be shaping best-practice approaches. These components are being championed by a variety of sources, including:

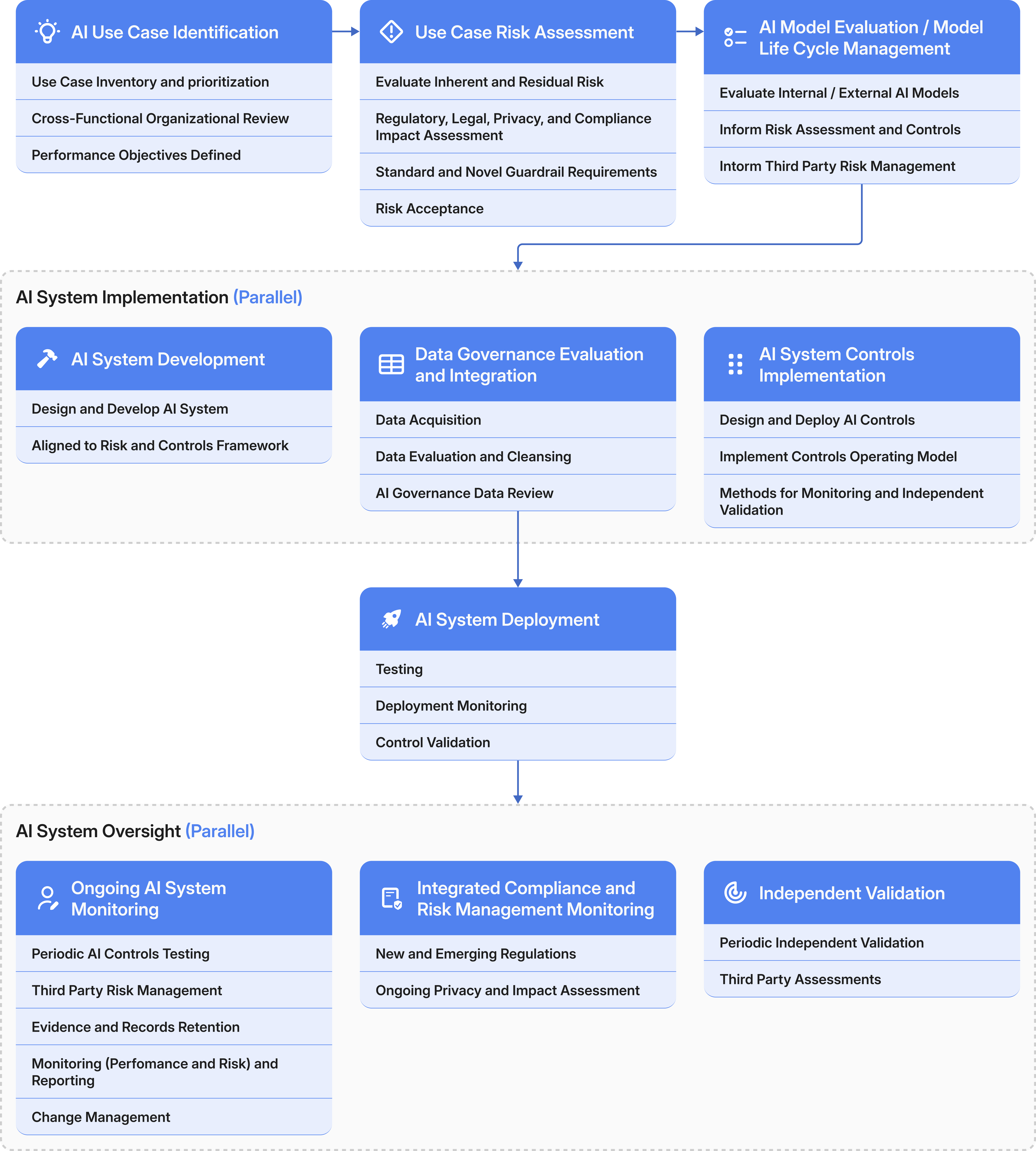

The AI lifecycle in Section 4.2 is presented to help identify where in the lifecycle the individual controls set forth in Sections 6, 7, and 8 are required when deploying AI across an enterprise.

4.2 AI Life Cycle Diagram

4.3 Key Roles and Responsibilities

While roles and responsibilities are not standardized across enterprises or industries, a common set of functional roles is critical to AI lifecycle execution and oversight. For purposes of this document and the detailed control inventory set out in Sections 6, 7, and 8, the following roles are critical for effective implementation.

Product Stakeholders: Stakeholders responsible for defining the vision, functionality, and business priority of the AI system. This set of stakeholders is ultimately accountable within the organization for the success of the AI system and for achieving its business objective. These stakeholders partner with other stakeholders (e.g., technology, risk management) to design and deploy the AI system.

Technology Development Stakeholders: Stakeholders responsible for the technical design, development, integration, and maintenance of the AI system. This set of stakeholders is also focused on application performance, scalability, testing, and reliability, and on ensuring that the technology satisfies Product Stakeholder requirements. This set of stakeholders also includes experts in machine learning and large language models.

Information Security & Privacy Stakeholders: Stakeholders responsible for ensuring that people, process, and technology are in place to safeguard against information security and cybersecurity threats and to satisfy privacy requirements. These stakeholders identify vulnerabilities, partner with others in the enterprise to build robust security measures, design and support the deployment of privacy controls, and work to satisfy compliance requirements for AI systems.

Risk Management Stakeholders: Stakeholders responsible for identifying and assessing the risks that AI deployments pose to the organization. Risk Management stakeholders are often identified by their specific risk expertise, which spans a broad variety of risk types, including technology, data, operational, model, compliance, legal, strategic, reputational, and financial risk, among others. Risk Management stakeholders partner with other stakeholders to identify controls that mitigate identified risks and facilitate any risk acceptance processes.

Legal & Compliance Stakeholders: Stakeholders with legal and compliance expertise who help guide the design, development, and oversight of an AI system. These stakeholders identify the rules, laws, and regulations applicable to the AI use case and partner with other stakeholders to establish people, process, and technology controls that bring the use case into line with the identified requirements, monitor it on an ongoing basis, and validate compliance.

Audit Stakeholders: Stakeholders responsible for independently evaluating the AI system to assess its functionality, performance, and alignment with risk and internal policy objectives. These stakeholders may be employees of the enterprise, or a third party may be engaged to perform an independent evaluation on the enterprise's behalf.
