01. Overview

1.1 Introduction

Since generative artificial intelligence (AI) entered the mainstream in late 2022, governments, enterprises, and trade associations have put forth high-level guidance focused on deploying AI in a safe and secure manner. The reasons for doing so are clear: the impacts of AI will be felt across People and Planet,1 so AI risk must be governed, mapped, measured, and managed at a level appropriately aligned to the expected impact across society.

At the same time, governments have advanced AI policy, with regulation from the European Union (EU), principles from Australia2 and the United Kingdom,3 and laws passed by individual states within the United States (New York, Colorado, and Utah, to name a few). While clarity regarding principled use is required, a core barrier to AI enablement for most enterprises is the lack of best-practice methods for implementing real-world AI controls that mitigate the risks inherent to AI.

Enterprises need best-practice guidance that covers methods, pitfalls, and expectations on observability and evidence, in order to drive assessments of AI residual risk and promote effective decision-making and auditability.

The Dynamo AI Guardrail Playbook (Playbook) is meant to fill this gap. This Playbook provides a comprehensive overview of how best to create, evaluate, and monitor AI guardrails: critical post-deployment AI controls that are specifically focused on mitigating risks that result from the use of, or interaction with, AI, including Large Language Models (LLMs), in the context of operating a regulated enterprise. The controls, evidence, and monitoring best practices all aim to satisfy compliance with internal policy, rules, laws, and regulations related to AI use case deployment. The Playbook is also meant to be understood by both technical and non-technical stakeholders and to be delivered in an ‘easy-to-understand’ way. Ultimately, this Playbook seeks to enable AI across a variety of use cases in a safe and sound manner.

The Playbook documents specific controls and associated control execution and monitoring requirements to evaluate and mitigate risks inherent in the use of AI as part of a deployed use case.

1.2 Scope

The Playbook does not aim to cover or include:

- A holistic AI risk management framework, such as those advanced by organizations including the National Institute of Standards and Technology (NIST), the International Organization for Standardization (ISO), and the Organisation for Economic Co-operation and Development (OECD).

- A focus on singular risk stripes such as Information Security or Privacy (progressed by organizations such as the Open Worldwide Application Security Project (OWASP) or The MITRE Corporation).

- End-to-end AI systems development (Section 4 provides a reference to relevant processes).

- Validation or mappings to regulations that may be required for a specific AI use case in a market or region.

1.3 About Dynamo AI

Founded in 2021 by a team of Ph.D.s from the Massachusetts Institute of Technology (MIT) and security experts at the forefront of compliant AI, Dynamo AI enables enterprises to deploy safe, secure, and compliant AI systems at scale.

Dynamo AI’s solutions are designed to address the risks and challenges of adopting generative AI where security and compliance are paramount. The Dynamo AI platform is distinguished as the first end-to-end compliant generative AI infrastructure specifically engineered for large-scale deployment across industries. Dynamo AI’s product suite can be run in major virtual private clouds, on-premises, or on edge devices.

Dynamo AI is backed by organizations including Y Combinator and Canapi Ventures (a consortium of 40 of the top 100 US financial institutions). This consortium of financial institutions partners with Dynamo AI to enable high-value AI use in environments that require a high level of governance and control.

1.4 Unique AI Control Insight

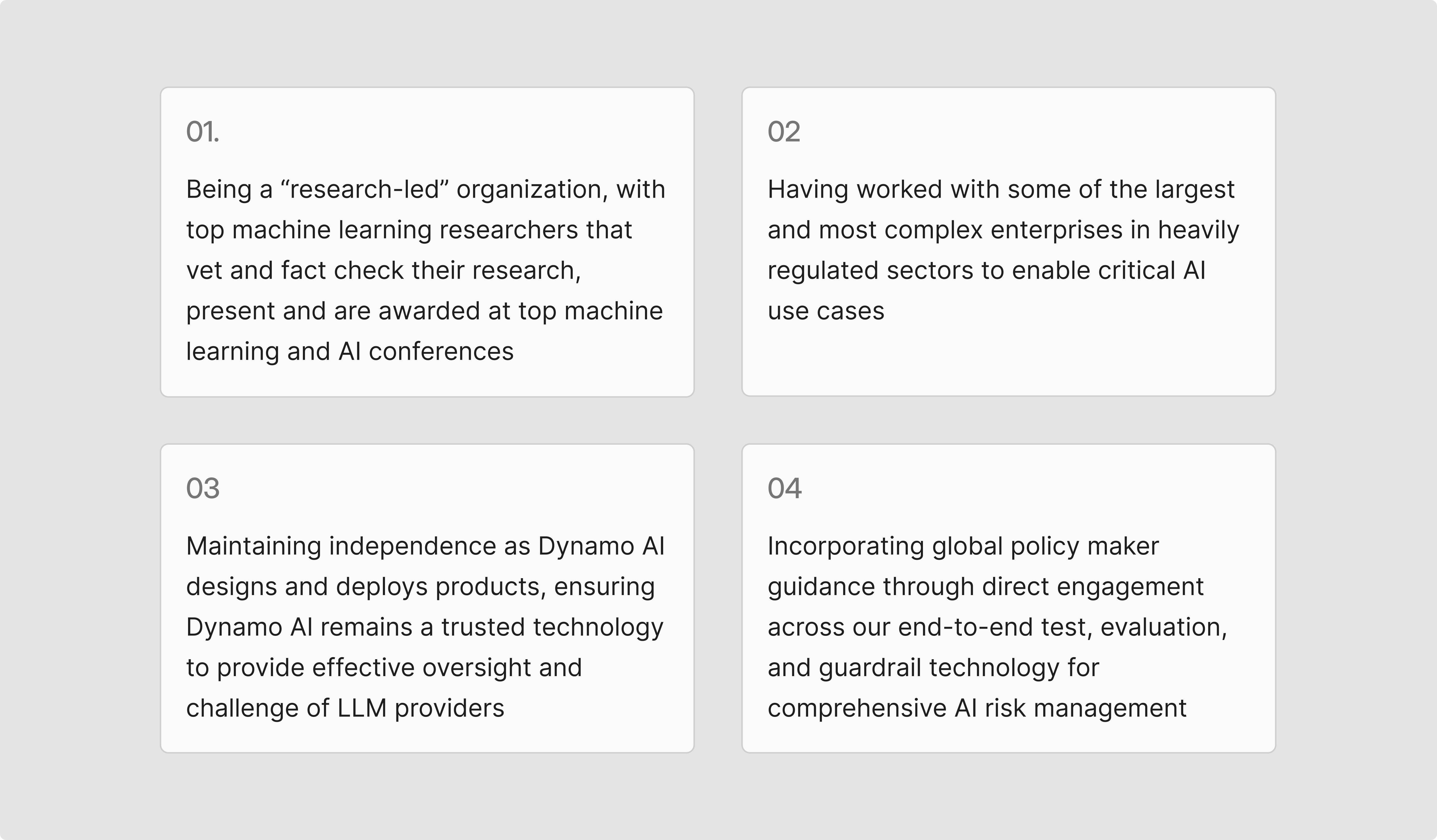

Dynamo AI’s mission, “Empower every organization to harness AI’s transformative potential with confidence and control,” requires that it be at the forefront of AI evaluation research, which is embedded into the culture of the organization. To design, develop, deploy, and oversee a comprehensive GenAI control suite, a number of critical components must be in place. For Dynamo AI, those include:

It is this unique combination of advanced AI test, evaluation, and guardrail technology, in-house AI expertise, early-adopter client engagement, and requirements for best practice within the current cycle of AI advancement that makes Dynamo AI guidance critical for enterprises at this moment in time.
