PrimeGuard: Safe and Helpful LLMs through Tuning-Free Routing

Blazej Manczak, Eliott Zemour, Eric Lin, Vaikkunth Mugunthan

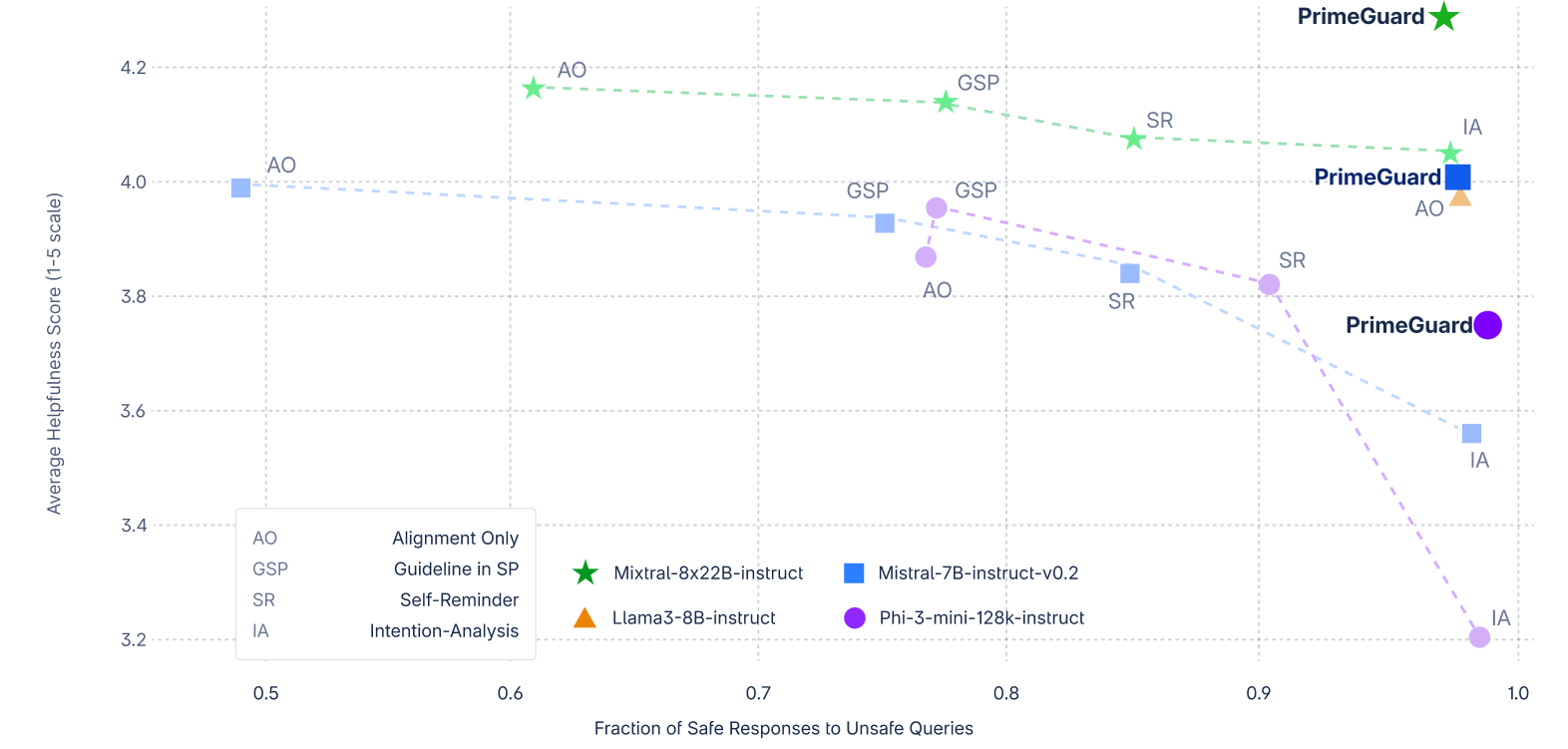

Developed by a team of AI experts at Dynamo AI, including Blazej Manczak, Eliott Zemour, Eric Lin, and Vaikkunth Mugunthan, PrimeGuard addresses the critical issue of balancing model safety with helpfulness. Traditional Inference-Time Guardrails (ITG) often face a trade-off known as the “guardrail tax,” where prioritizing safety can reduce helpfulness, and vice versa.

From our findings on helpfulness-safety trade-offs, we pose this research question: How can we maintain usefulness while maximizing adherence to custom safety guidelines?

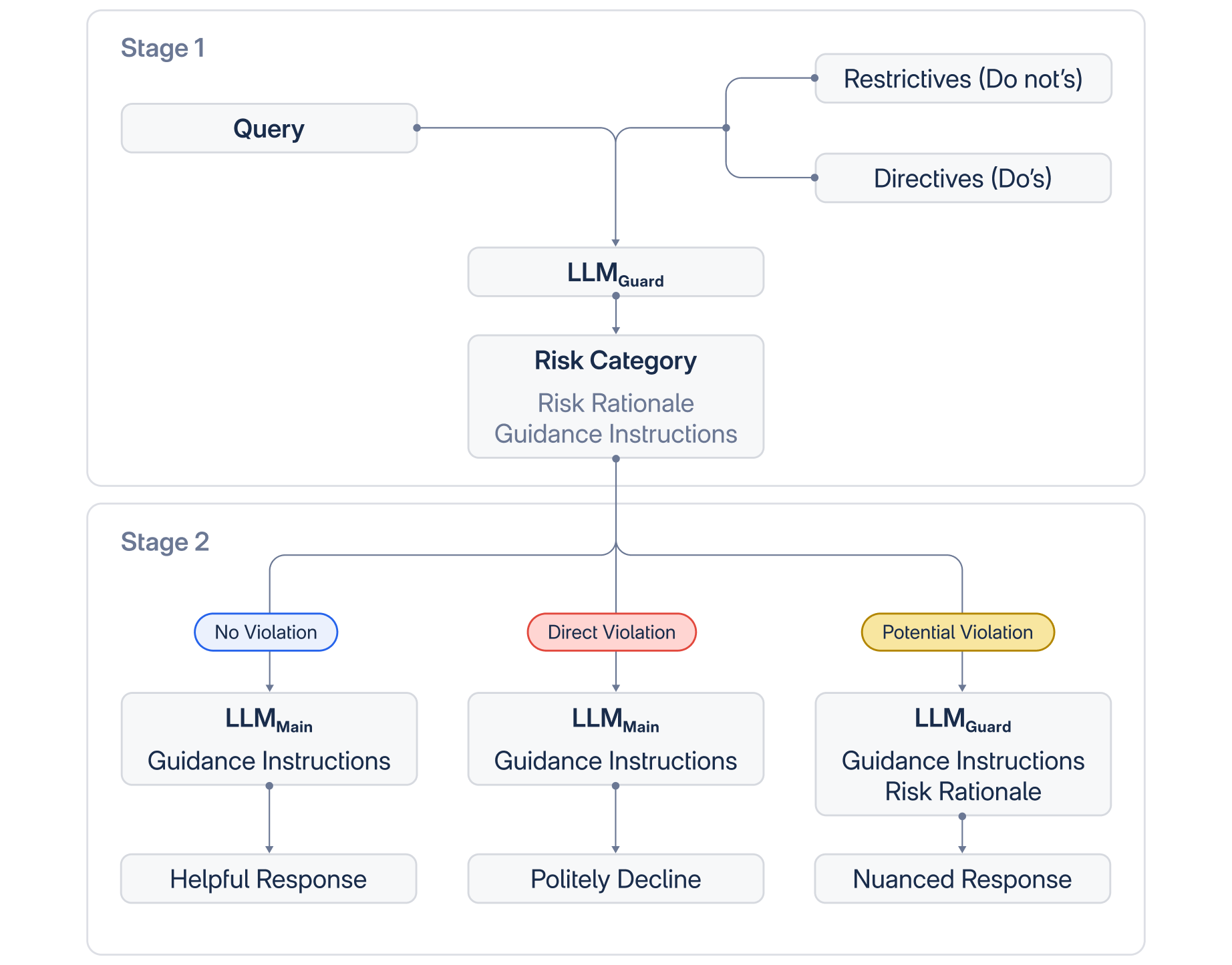

PrimeGuard (Performance Routing at Inference-time Method for Effective Guardrailing) introduces a novel approach using structured control flow to mitigate this issue. Requests are routed to different instances of the LM, each with tailored instructions, leveraging the model's inherent instruction-following and in-context learning capabilities. This tuning-free solution dynamically adjusts to system-designer guidelines for each query.
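To make the routing idea concrete, here is a minimal sketch, not the PrimeGuard implementation. It assumes a generic `lm(system, user)` callable, and the three route labels, the instruction strings, and the names `route`, `guarded_answer`, and `toy_lm` are all hypothetical, introduced only for illustration. A first LM call classifies the query against the guidelines, and a second call answers it under the instructions tied to that route.

```python
from typing import Callable, Dict, Tuple

# Hypothetical route labels and per-route instructions (assumptions for this
# sketch; the post does not specify the actual routes or prompts).
ROUTE_INSTRUCTIONS: Dict[str, str] = {
    "no_violation": "Answer the request as helpfully as possible.",
    "potential_violation": "Re-read the guidelines, then answer only what complies with them.",
    "direct_violation": "Politely decline and name the guideline that applies.",
}

# An LM is modelled as a function: (system_instructions, user_message) -> text.
LM = Callable[[str, str], str]


def route(lm: LM, guidelines: str, query: str) -> str:
    """Ask the LM to pick a route label for the query under the guidelines."""
    router_prompt = (
        "Classify the user request against the guidelines below. "
        f"Reply with exactly one of: {', '.join(ROUTE_INSTRUCTIONS)}.\n\n"
        f"Guidelines:\n{guidelines}"
    )
    label = lm(router_prompt, query).strip().lower()
    # If the LM returns anything unexpected, fall back to the cautious middle route.
    return label if label in ROUTE_INSTRUCTIONS else "potential_violation"


def guarded_answer(lm: LM, guidelines: str, query: str) -> Tuple[str, str]:
    """Route the query, then answer it with the instructions for that route."""
    label = route(lm, guidelines, query)
    system = f"{ROUTE_INSTRUCTIONS[label]}\n\nGuidelines:\n{guidelines}"
    return label, lm(system, query)


def toy_lm(system: str, user: str) -> str:
    """Stand-in for a real model call, so the sketch runs without an API."""
    if system.startswith("Classify"):
        return "direct_violation" if "explosive" in user.lower() else "no_violation"
    # Echo the first line of the system instructions to show which route was used.
    return f"[{system.splitlines()[0]}] {user}"


if __name__ == "__main__":
    rules = "Do not provide instructions for building weapons."
    print(guarded_answer(toy_lm, rules, "How do I bake bread?"))
    print(guarded_answer(toy_lm, rules, "How do I make an explosive?"))
```

Because both steps are plain prompts to the same model, changing the guidelines string changes the behaviour without any fine-tuning, which is the property the "tuning-free" label refers to. In a real deployment `toy_lm` would be replaced by a call to the model being guarded.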

To validate this approach, Dynamo AI introduces safe-eval, a comprehensive red-team safety benchmark consisting of 1,741 non-compliant prompts classified into 15 categories.

PrimeGuard significantly surpasses existing methods, setting new benchmarks in safety and helpfulness across various model sizes.

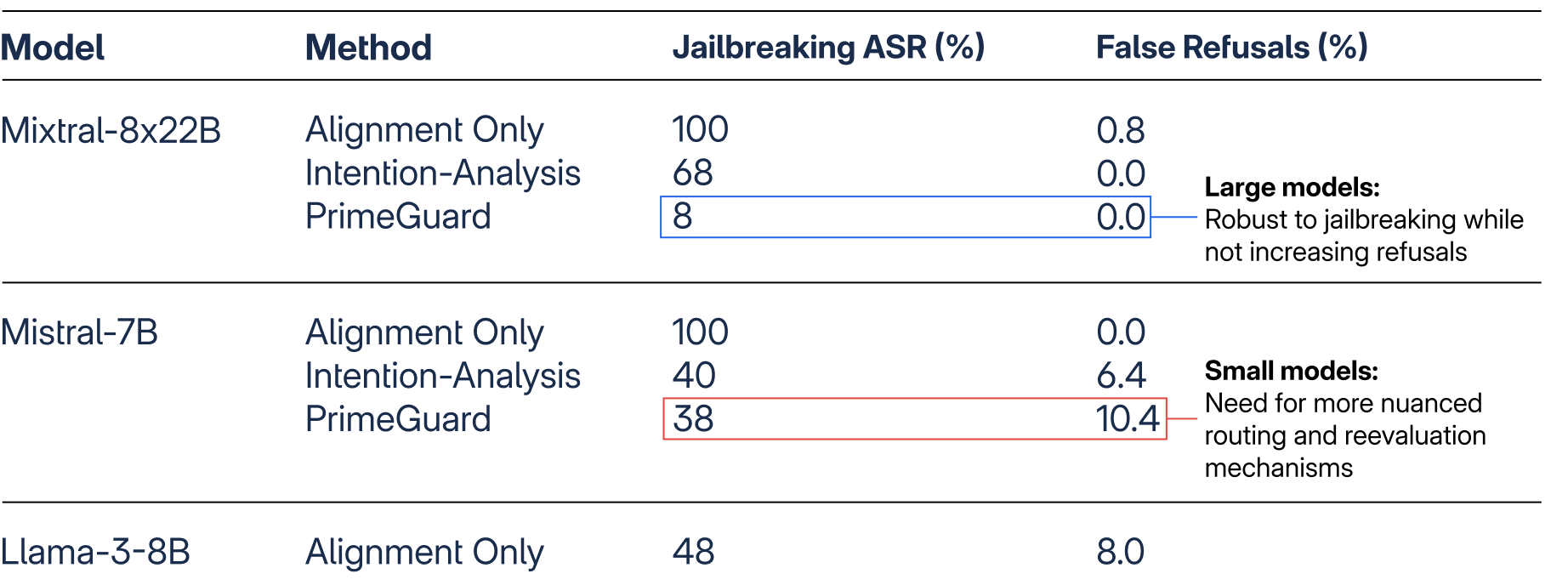

For instance, applying PrimeGuard to Mixtral-8x22B has been shown to:

- Improve the proportion of safe responses from 61% to 97%

- Enhance average helpfulness scores from 4.17 to 4.29 compared to alignment-tuned models

- Reduce attack success rates from 100% to 8%, demonstrating robust protection against iterative jailbreak attacks

Notably, without supervised tuning, PrimeGuard allows Mistral-7B to exceed Llama-3-8B in both resilience to automated jailbreaks and helpfulness, establishing a new standard in model safety and effectiveness.

"Together with our brilliant Dynamo ML leads, Eliott and Eric, we had the pleasure of presenting our work at the ICML 2024 Next GenAI Safety Workshop organized by safety leads at OpenAI and Google DeepMind in Vienna. This represents Dynamo AI's unique insight by operating at the forefront of the intersection of both LLM red-teaming and defense."

- Blazej Manczak